Neural Networks and Deep Learning (Coursera): Week 4 Quiz Answers

Quiz - Key Concepts on Deep Neural Networks

1. We use the "cache" in our implementation of forward and backward propagation to pass useful values to the next layer in the forward propagation. True/False?

- False

- True
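
To make the role of the cache concrete, here is a minimal sketch assuming a single ReLU layer and NumPy. The function names `linear_relu_forward` and `linear_relu_backward` are hypothetical and not taken from the course code. The forward step saves the values that the matching backward step later needs to compute gradients.

```python
import numpy as np

def linear_relu_forward(A_prev, W, b):
    """Forward step for one layer (hypothetical helper); returns A and a cache."""
    Z = W @ A_prev + b
    A = np.maximum(0, Z)
    # The cache is kept for the backward pass of this same layer;
    # only A is handed on to the next layer in forward propagation.
    cache = (A_prev, W, Z)
    return A, cache

def linear_relu_backward(dA, cache):
    """Backward step for the same layer, reading values from the cache."""
    A_prev, W, Z = cache
    m = A_prev.shape[1]                      # number of examples
    dZ = dA * (Z > 0)                        # ReLU derivative uses the cached Z
    dW = (dZ @ A_prev.T) / m                 # uses the cached A_prev
    db = dZ.sum(axis=1, keepdims=True) / m
    dA_prev = W.T @ dZ                       # uses the cached W
    return dA_prev, dW, db
```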

2. Among the following, which ones are "hyperparameters"? (Check all that apply.)

- size of the hidden layers n^[l]

- learning rate α (alpha)

- number of layers L in the neural network

- activation values a^[l]

- weight matrices W^[l]

- number of iterations

- bias vectors b^[l]

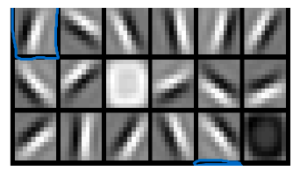

3. Which of the following is more likely related to the early layers of a deep neural network?

- Figure 1:

- Figure 2:

- Figure 3:

4. Vectorization allows you to compute forward propagation in an L-layer neural network without an explicit for-loop (or any other explicit iterative loop) over the layers l = 1, 2, …, L. True/False?

- False

- True
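
The sketch below shows forward propagation through L layers. It assumes the parameters live in a dict keyed `W1`, `b1`, and so on, with ReLU hidden layers and a sigmoid output; these choices are illustrative assumptions, not part of the question. Vectorization removes the loop over training examples (each column of `X` is one example), but each layer's input is the previous layer's output, so the layers are still visited one after another.

```python
import numpy as np

def forward_propagation(X, parameters):
    """Vectorized over examples, but still iterates over the layers."""
    L = len(parameters) // 2          # one W and one b per layer
    A = X
    for l in range(1, L + 1):         # explicit loop over layers 1..L
        Z = parameters[f"W{l}"] @ A + parameters[f"b{l}"]
        if l == L:
            A = 1 / (1 + np.exp(-Z))  # sigmoid on the output layer (assumed)
        else:
            A = np.maximum(0, Z)      # ReLU on hidden layers (assumed)
    return A
```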

5. Assume we store the values for n^[l] in an array called layer_dims, as follows: layer_dims = [n_x, 4, 3, 2, 1]. So layer 1 has four hidden units, layer 2 has three hidden units, and so on. Which of the following for-loops will allow you to initialize the parameters for the model?

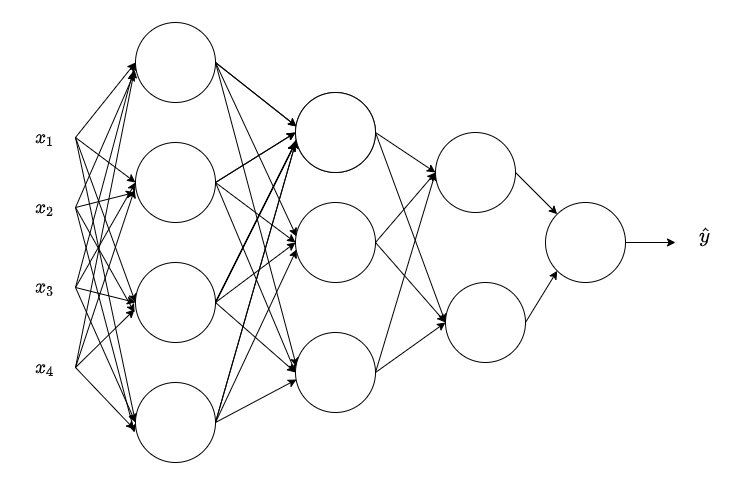
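
For reference, one common way to write such an initialization loop is sketched below with NumPy. The value `n_x = 5`, the `0.01` scaling of the weights, and the zero-initialized biases are assumptions made for the example; the question does not specify them. The key point is the shapes: W^[l] is (n^[l], n^[l-1]) and b^[l] is (n^[l], 1).

```python
import numpy as np

n_x = 5                              # hypothetical input size for the example
layer_dims = [n_x, 4, 3, 2, 1]

parameter = {}
for i in range(1, len(layer_dims)):  # layers 1..L; index 0 is the input layer
    # W^[i] maps layer i-1 (columns) to layer i (rows)
    parameter["W" + str(i)] = np.random.randn(layer_dims[i], layer_dims[i - 1]) * 0.01
    # b^[i] is a column vector with one entry per unit in layer i
    parameter["b" + str(i)] = np.zeros((layer_dims[i], 1))
```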

6. Consider the following neural network:

What are all the values of n^[0], n^[1], n^[2], n^[3] and n^[4]?

- 4, 4, 3, 2

- 4, 4, 3, 2, 1

- 4, 3, 2

- 4, 3, 2, 1

7. During forward propagation, the value of A^[l] is computed from Z^[l] using the activation function g^[l]. During backward propagation, we calculate dA^[l] from Z^[l]. True/False?

- False

- True

8. For any mathematical function you can compute with an L-layered deep neural network with N hidden units, there is a shallow neural network that requires only log N units, but it is very difficult to train. True/False?

- True

- False

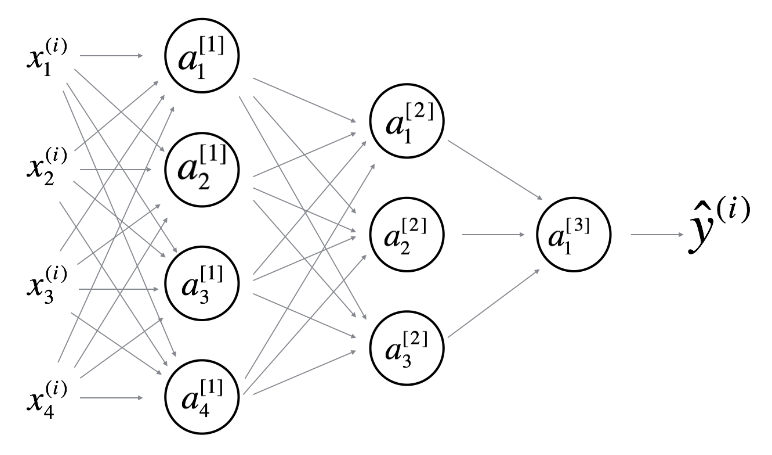

9. Consider the following 2-hidden-layer neural network:

Which of the following statements are True? (Check all that apply).

- b^[2] will have shape (3, 1)

- b^[3] will have shape (1, 1)

- W^[1] will have shape (3, 4)

- b^[1] will have shape (3, 1)

- W^[1] will have shape (4, 4)

- W^[2] will have shape (3, 1)

- W^[3] will have shape (3, 1)

- b^[3] will have shape (3, 1)

- b^[2] will have shape (1, 1)

- W^[2] will have shape (3, 4)

- W^[3] will have shape (1, 3)

- b^[1] will have shape (4, 1)
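
The shape rule behind these options can be checked with a short sketch. The layer sizes `[4, 4, 3, 1]` are an assumption, since the figure is not reproduced in the text; substitute the sizes shown in the figure. The helper `parameter_shapes` is hypothetical. For every layer l, W^[l] has shape (n^[l], n^[l-1]) and b^[l] has shape (n^[l], 1).

```python
def parameter_shapes(layer_dims):
    """Return the shapes of W^[l] and b^[l] for each layer l = 1..L."""
    shapes = {}
    for l in range(1, len(layer_dims)):
        shapes[f"W{l}"] = (layer_dims[l], layer_dims[l - 1])
        shapes[f"b{l}"] = (layer_dims[l], 1)
    return shapes

# Assumed sizes n^[0], n^[1], n^[2], n^[3]; check them against the figure.
layer_dims = [4, 4, 3, 1]
for name, shape in parameter_shapes(layer_dims).items():
    print(name, shape)
```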