Advanced Learning Algorithms (Coursera): Week 2 Answers

Practice quiz: Neural Network Training

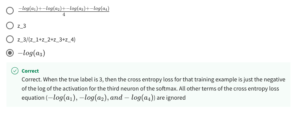

1. Here is some code that you saw in the lecture:

```
model.compile(loss=BinaryCrossentropy())
```

For which type of task would you use the binary cross entropy loss function?

- BinaryCrossentropy() should not be used for any task.

- A classification task that has 3 or more classes (categories)

- regression tasks (tasks that predict a number)

- binary classification (classification with exactly 2 classes)
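
To make the loss concrete, here is a minimal sketch in plain Python of the binary cross-entropy for a single example, assuming the usual definition -y·log(a) - (1-y)·log(1-a). The helper name `binary_cross_entropy` is made up for illustration and is not the Keras API.

```python
import math

def binary_cross_entropy(y_true, a):
    """Loss for one example (illustrative helper, not a Keras function).

    y_true is the label (0 or 1); a is the predicted probability of class 1.
    """
    return -(y_true * math.log(a) + (1 - y_true) * math.log(1 - a))

# Same true label (1), two different predictions.
confident_right = binary_cross_entropy(1, 0.9)
confident_wrong = binary_cross_entropy(1, 0.1)
print(confident_right, confident_wrong)
```

The loss is small (about 0.105) when the model assigns 0.9 to the true class and much larger (about 2.303) when it assigns 0.1, which is why this loss only makes sense when the label is one of exactly two classes.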

2. Here is code that you saw in the lecture:

```
model = Sequential([
    Dense(units=25, activation='sigmoid'),
    Dense(units=15, activation='sigmoid'),
    Dense(units=1, activation='sigmoid')
])
model.compile(loss=BinaryCrossentropy())
model.fit(X,y,epochs=100)
```

Which line of code updates the network parameters in order to reduce the cost?

- model.fit(X,y,epochs=100)

- model.compile(loss=BinaryCrossentropy())

- None of the above — this code does not update the network parameters.

- model = Sequential([…])
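
As a rough picture of what a training call does, the sketch below runs plain gradient descent on a single sigmoid unit in pure Python. It is only an assumption-laden stand-in for a real training loop: the functions `cost` and `fit` and the tiny dataset are hypothetical, and TensorFlow's actual `model.fit` is far more general.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def cost(w, b, X, y):
    """Average binary cross-entropy of a single sigmoid unit."""
    total = 0.0
    for x_i, y_i in zip(X, y):
        a = sigmoid(w * x_i + b)
        total += -(y_i * math.log(a) + (1 - y_i) * math.log(1 - a))
    return total / len(X)

def fit(w, b, X, y, epochs, learning_rate):
    """Hypothetical stand-in for a training loop: one gradient step per epoch."""
    n = len(X)
    for _ in range(epochs):
        errors = [sigmoid(w * x_i + b) - y_i for x_i, y_i in zip(X, y)]
        dw = sum(e * x_i for e, x_i in zip(errors, X)) / n
        db = sum(errors) / n
        w -= learning_rate * dw  # this is where the parameters change
        b -= learning_rate * db
    return w, b

# Toy data, invented for this example.
X = [0.0, 1.0, 2.0, 3.0]
y = [0, 0, 1, 1]

cost_before = cost(0.0, 0.0, X, y)
w, b = fit(0.0, 0.0, X, y, epochs=100, learning_rate=0.1)
cost_after = cost(w, b, X, y)
print(cost_before, cost_after)
```

Defining the model and choosing the loss change no parameters; only the loop inside `fit` does, and the cost is lower after it runs.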

Practice quiz: Activation Functions

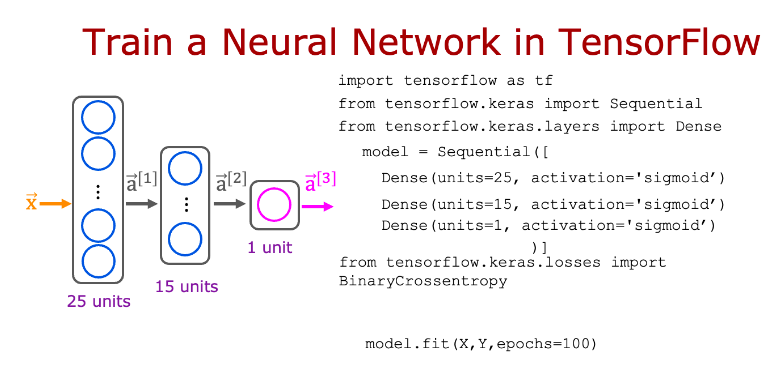

3. Which of the following activation functions is the most common choice for the hidden layers of a neural network?

- Linear

- ReLU (rectified linear unit)

- Sigmoid

- Most hidden layers do not use any activation function

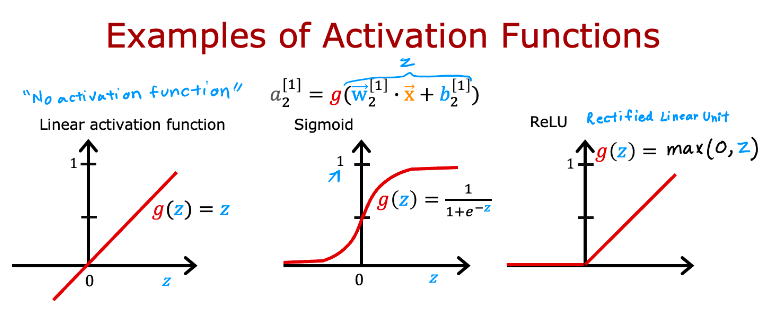

4. For the task of predicting housing prices, which activation functions could you choose for the output layer? Choose the 2 options that apply.

- Sigmoid

- linear

- ReLU
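
The output ranges of the three activations are what matter for the two questions above. A small sketch in plain Python, with function names chosen here for illustration:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def linear(z):
    return z

def relu(z):
    return max(0.0, z)

# Compare the three activations on a negative, a zero, and a positive input.
for z in (-2.0, 0.0, 3.0):
    print(z, sigmoid(z), linear(z), relu(z))
```

Sigmoid always stays strictly between 0 and 1, so it cannot output a value such as a house price. Linear can output any number, and ReLU can output any non-negative number, which suits a target that is never negative.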

5. True/False? A neural network with many layers but no activation function (in the hidden layers) is not effective; that’s why we should instead use the linear activation function in every hidden layer.

- True

- False
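
The reason linear hidden layers do not help can be checked numerically: stacking two linear layers gives exactly another linear function. The sketch below uses scalar weights picked arbitrarily for the example.

```python
def dense_linear(x, w, b):
    """A one-unit layer with a linear activation: output = w * x + b."""
    return w * x + b

# Arbitrary example weights for two stacked layers.
w1, b1 = 2.0, 1.0
w2, b2 = -3.0, 0.5

def two_layer(x):
    return dense_linear(dense_linear(x, w1, b1), w2, b2)

# Collapse both layers algebraically: w2 * (w1 * x + b1) + b2.
w_combined = w2 * w1
b_combined = w2 * b1 + b2

def one_layer(x):
    return dense_linear(x, w_combined, b_combined)

for x in (-1.0, 0.0, 2.5):
    print(x, two_layer(x), one_layer(x))
```

Both functions print the same value for every input, so a network with linear activations in every hidden layer is no more expressive than a single linear layer.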

Practice quiz: Multiclass Classification

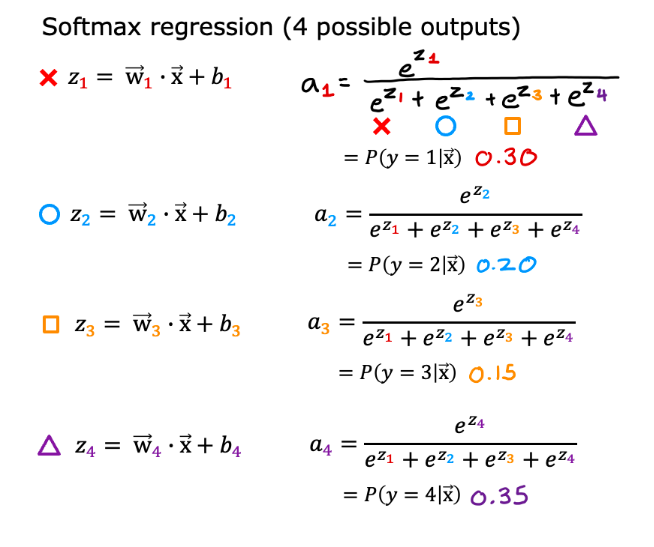

6. For a multiclass classification task that has 4 possible outputs, the sum of all the activations adds up to 1. For a multiclass classification task that has 3 possible outputs, the sum of all the activations should add up to ….

- Less than 1

- More than 1

- 1 (Correct Answer)

- It will vary, depending on the input x.
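
A quick way to convince yourself is to compute softmax by hand. This is a minimal sketch in plain Python; the input values are arbitrary.

```python
import math

def softmax(z):
    """Softmax over a list of numbers; subtracting the max avoids overflow."""
    largest = max(z)
    exps = [math.exp(v - largest) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

a4 = softmax([2.0, -1.0, 0.5, 3.0])  # 4 possible outputs
a3 = softmax([0.1, 0.2, 0.3])        # 3 possible outputs
print(sum(a4), sum(a3))
```

Each activation is divided by the same total, so the activations sum to 1 (up to floating-point rounding) regardless of how many classes there are or what the input is.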

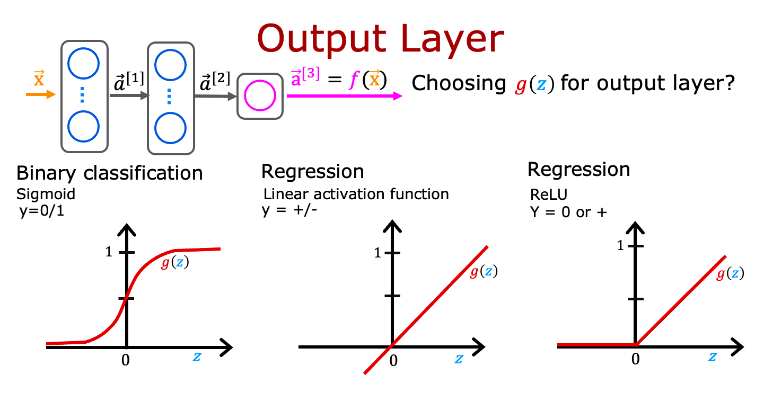

7. For multiclass classification, the cross entropy loss is used for training the model. If there are 4 possible classes for the output, and for a particular training example, the true class of the example is class 3 (y=3), then what does the cross entropy loss simplify to? [Hint: This loss should get smaller when a_3 gets larger.]

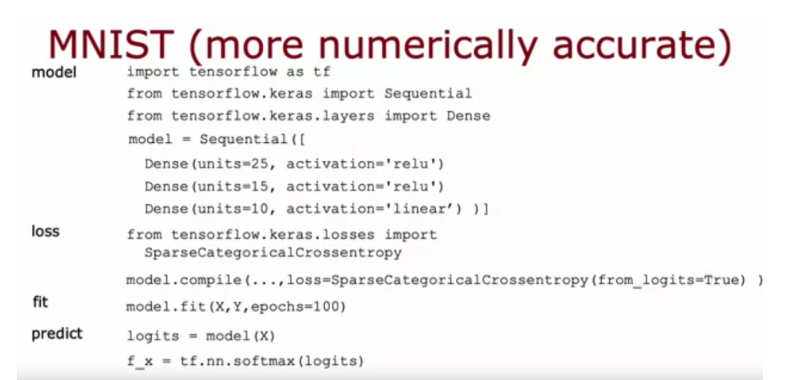
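
With a single true class, only the term for that class survives in the cross-entropy sum, so for y=3 the loss reduces to -log(a_3). A minimal sketch, assuming classes are numbered from 1 as in the question; the function name and the activation values are invented for illustration:

```python
import math

def sparse_cross_entropy(activations, y):
    """Loss for one example.

    activations: softmax outputs a_1..a_N as a list.
    y: the true class, numbered from 1 as in the quiz.
    """
    return -math.log(activations[y - 1])

loss_low_a3 = sparse_cross_entropy([0.4, 0.3, 0.2, 0.1], y=3)   # a_3 = 0.2
loss_high_a3 = sparse_cross_entropy([0.1, 0.1, 0.7, 0.1], y=3)  # a_3 = 0.7
print(loss_low_a3, loss_high_a3)
```

The second loss is smaller, matching the hint: the loss shrinks as a_3 grows.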

8. For multiclass classification, the recommended way to implement softmax regression is to set from_logits=True in the loss function, and also to define the model's output layer with…

- a ‘linear’ activation

- a ‘softmax’ activation
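
The motivation for computing the loss from raw logits is numerical accuracy. The sketch below is not TensorFlow's internal implementation; it only illustrates, in plain Python with made-up function names, how taking the softmax first and the log afterwards can lose precision that a combined formula keeps.

```python
import math

def loss_from_probabilities(logits, y):
    """Naive route: softmax first, then -log of the true-class probability."""
    exps = [math.exp(v) for v in logits]
    prob = exps[y] / sum(exps)
    # If the probability underflowed to 0, the loss is effectively infinite.
    return -math.log(prob) if prob > 0.0 else math.inf

def loss_from_logits(logits, y):
    """Combined route: log-sum-exp of the logits minus the true-class logit."""
    largest = max(logits)
    log_sum_exp = largest + math.log(sum(math.exp(v - largest) for v in logits))
    return log_sum_exp - logits[y]

# y is a 0-based index here.
moderate = [2.0, 1.0, 0.1]
extreme = [0.0, -800.0]

print(loss_from_probabilities(moderate, 0), loss_from_logits(moderate, 0))
print(loss_from_probabilities(extreme, 1), loss_from_logits(extreme, 1))
```

For moderate logits the two routes agree. For the extreme case the naive route underflows to a probability of 0 and an infinite loss, while the combined route returns the finite value 800. Passing logits to the loss requires the output layer to emit them unchanged, which is what a linear activation does.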

Practice quiz: Additional Neural Network Concepts

9. The Adam optimizer is the recommended optimizer for finding the optimal parameters of the model. How do you use the Adam optimizer in TensorFlow?

- The Adam optimizer works only with Softmax outputs. So if a neural network has a Softmax output layer, TensorFlow will automatically pick the Adam optimizer.

- When calling model.compile, set optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3).

- The call to model.compile() will automatically pick the best optimizer, whether it is gradient descent, Adam or something else. So there’s no need to pick an optimizer manually.

- The call to model.compile() uses the Adam optimizer by default
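
For intuition about what the optimizer does once it is selected, here is a minimal pure-Python sketch of the standard Adam update rule on a one-parameter toy problem. The function `adam_minimize` and the objective (w - 3)^2 are assumptions made for this example and are not part of TensorFlow.

```python
import math

def adam_minimize(grad, w, learning_rate=1e-3, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=200):
    """Run `steps` Adam updates on a single parameter w (illustrative only)."""
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias correction
        v_hat = v / (1 - beta2 ** t)
        w -= learning_rate * m_hat / (math.sqrt(v_hat) + eps)
    return w

# Toy objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_start = 0.0
w_end = adam_minimize(lambda w: 2 * (w - 3.0), w_start, learning_rate=0.1)
print(w_start, w_end)
```

The `learning_rate` argument plays the same role as the `learning_rate=1e-3` passed to `tf.keras.optimizers.Adam` in the option above: it is an initial step size that Adam then scales per parameter using the running gradient statistics.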

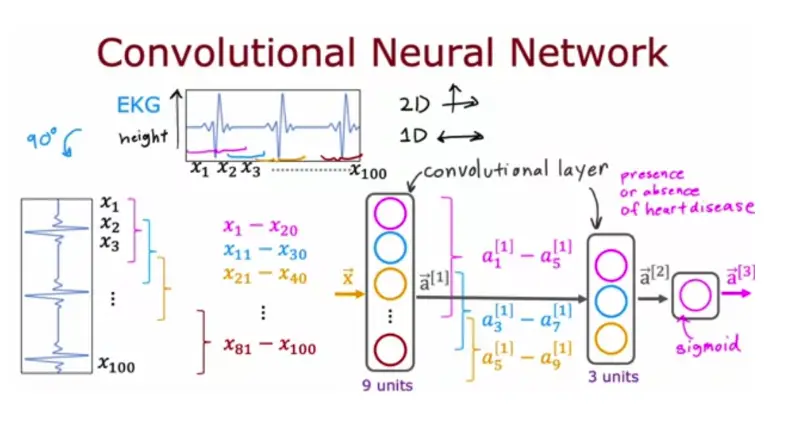

10. The lecture covered a different layer type where each single neuron of the layer does not look at all the values of the input vector that is fed into that layer. What is the name of the layer type discussed in the lecture?

- A fully connected layer

- Image layer

- convolutional layer

- 1D layer or 2D layer (depending on the input dimension)
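
The idea that each neuron sees only part of the input can be sketched with a one-dimensional sliding window in plain Python. The function name, the kernel, and the input values are made up for illustration, and the bias and activation are omitted to keep it short.

```python
def conv1d_valid(x, kernel):
    """Each output looks only at a window of len(kernel) consecutive inputs."""
    k = len(kernel)
    return [
        sum(kernel[j] * x[i + j] for j in range(k))
        for i in range(len(x) - k + 1)
    ]

kernel = [1.0, 0.0, -1.0]
x = [1.0, 2.0, 3.0, 4.0, 5.0]
out = conv1d_valid(x, kernel)

# Change only the last input value and recompute.
x_changed = [1.0, 2.0, 3.0, 4.0, 50.0]
out_changed = conv1d_valid(x_changed, kernel)

print(out)
print(out_changed)
```

Only the last output changes, because the first two windows never include the last input. In a fully connected layer every output would depend on every input.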