Sequence Models (Coursera) Week 2 Quiz Answers

Quiz - Natural Language Processing & Word Embeddings

1. Suppose you learn a word embedding for a vocabulary of 10000 words. Then the embedding vectors could be 10000-dimensional, so as to capture the full range of variation and meaning in those words.

- True

- False

2. What is t-SNE?

- A supervised learning algorithm for learning word embeddings

- A linear transformation that allows us to solve analogies on word vectors

- A non-linear dimensionality reduction technique

- An open-source sequence modeling library

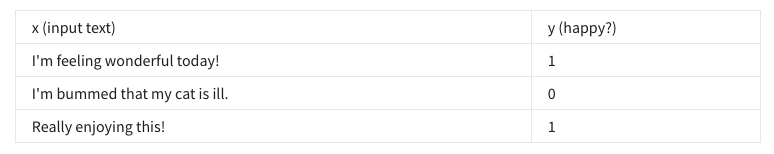

3. Suppose you download a pre-trained word embedding which has been trained on a huge corpus of text. You then use this word embedding to train an RNN for a language task of recognizing if someone is happy from a short snippet of text, using a small training set.

True/False: Then even if the word “upset” does not appear in your small training set, your RNN might reasonably be expected to recognize “I’m upset” as deserving a label y = 0.

- False

- True

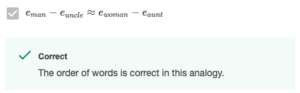

4. Which of these equations do you think should hold for a good word embedding? (Check all that apply)

5. Let A be an embedding matrix, and let $o_{4567}$ be a one-hot vector corresponding to word 4567. Then to get the embedding of word 4567, why don't we call `A * o_4567` in Python?

- It is computationally wasteful.

- The correct formula is $A^T * o_{4567}$

- None of the answers are correct: calling the Python snippet as described above is fine.

- This doesn’t handle unknown words (`<UNK>`).
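
To make the cost comparison in question 5 concrete, here is a minimal pure-Python sketch. It assumes the embedding matrix A has shape (embedding dimension, vocabulary size), so that multiplying by a one-hot vector selects one column. The toy sizes and the helper names `one_hot`, `matvec`, and `lookup` are illustrative and do not come from the quiz.

```python
import random

random.seed(0)
EMBED_DIM, VOCAB = 4, 10  # toy sizes (assumed); real vocabularies are far larger

# A[i][j] is component i of the embedding of word j: shape (EMBED_DIM, VOCAB).
A = [[random.random() for _ in range(VOCAB)] for _ in range(EMBED_DIM)]


def one_hot(index, size):
    """Return a one-hot vector with a 1.0 at position `index`."""
    return [1.0 if k == index else 0.0 for k in range(size)]


def matvec(matrix, vector):
    """Dense matrix-vector product: rows * cols multiplications."""
    return [sum(a * v for a, v in zip(row, vector)) for row in matrix]


def lookup(matrix, index):
    """Read column `index` directly: only `rows` reads, no multiplications."""
    return [row[index] for row in matrix]


word = 7
via_product = matvec(A, one_hot(word, VOCAB))  # multiplies mostly by zeros
via_lookup = lookup(A, word)                   # same vector, far less work
```

Both paths return the same vector. The product spends EMBED_DIM * VOCAB multiplications, almost all of them by zero, while the lookup only reads EMBED_DIM entries.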

6. When learning word embeddings, we pick a given word and try to predict its surrounding words or vice versa.

- True

- False

7. In the word2vec algorithm, you estimate P(t | c), where t is the target word and c is a context word. How are t and c chosen from the training set? Pick the best answer.

- c is the one word that comes immediately before t

- c and t are chosen to be nearby words.

- c is the sequence of all the words in the sentence before t

- c is a sequence of several words immediately before t
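
As an illustration of how skip-gram style training pairs can be drawn, the sketch below picks each word in turn as the context and samples a target from a small window around it. The window size, the sample sentence, and the function name `sample_context_target_pairs` are assumptions made for this example, not part of the quiz.

```python
import random


def sample_context_target_pairs(tokens, window=2, pairs_per_context=1, seed=0):
    """Sample (context, target) pairs where the target lies within
    `window` positions of the context word, on either side."""
    rng = random.Random(seed)
    pairs = []
    for i, context in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens) - 1, i + window)
        # Every position in the window except the context word itself.
        candidates = [j for j in range(lo, hi + 1) if j != i]
        if not candidates:
            continue
        for _ in range(pairs_per_context):
            j = rng.choice(candidates)
            pairs.append((context, tokens[j]))
    return pairs


sentence = "I want a glass of orange juice to go along with my cereal".split()
pairs = sample_context_target_pairs(sentence, window=2)
```

Each pair holds a context word and a target that sits at most `window` positions away, before or after it.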

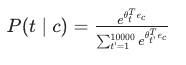

8. Suppose you have a 10000 word vocabulary, and are learning 100-dimensional word embeddings. The word2vec model uses the following softmax function:

$$P(t \mid c) = \frac{e^{\theta_t^T e_c}}{\sum_{t'=1}^{10000} e^{\theta_{t'}^T e_c}}$$

True/False: After training, we should expect $\theta_t$ to be very close to $e_c$ when $t$ and $c$ are the same word.

- False

- True
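
The sketch below evaluates a word2vec-style softmax on toy data, assuming the standard form in which P(t | c) is proportional to the exponential of the dot product between a target-side vector `theta[t]` and a context-side embedding `e[c]`. The sizes, the random initialization, and the function names are illustrative assumptions. Note that `theta` and `e` are stored as two separate parameter tables.

```python
import math
import random

random.seed(0)
VOCAB, EMBED_DIM = 6, 3  # toy sizes (assumed); the quiz uses 10000 and 100


def random_matrix(rows, cols):
    """Small random values standing in for learned parameters."""
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


# Two separate parameter sets: e[c] for context words, theta[t] for targets.
e = random_matrix(VOCAB, EMBED_DIM)
theta = random_matrix(VOCAB, EMBED_DIM)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def p_target_given_context(c):
    """Softmax over all targets t of theta[t] . e[c]."""
    scores = [dot(theta[t], e[c]) for t in range(VOCAB)]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [x / total for x in exps]


probs = p_target_given_context(2)
```

The denominator sums over the whole vocabulary, so one evaluation costs on the order of VOCAB * EMBED_DIM operations.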

9. Suppose you have a 10000 word vocabulary, and are learning 500-dimensional word embeddings. The GloVe model minimizes this objective:

$$\min \sum_{i=1}^{10000} \sum_{j=1}^{10000} f(X_{ij}) \left(\theta_i^T e_j + b_i + b'_j - \log X_{ij}\right)^2$$

True/False: $X_{ij}$ is the number of times word j appears in the context of word i.

- True

- False
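
To show what a co-occurrence count looks like in practice, the sketch below builds a table `X` where `X[i][j]` counts how often word j falls within a fixed window of word i. The symmetric window, its size, the sample sentence, and the function name `cooccurrence_counts` are assumptions chosen for illustration.

```python
from collections import defaultdict


def cooccurrence_counts(tokens, window=2):
    """X[i][j] = number of times word j appears within `window`
    positions of an occurrence of word i."""
    X = defaultdict(lambda: defaultdict(int))
    for pos, word_i in enumerate(tokens):
        lo = max(0, pos - window)
        hi = min(len(tokens), pos + window + 1)
        for other in range(lo, hi):
            if other != pos:
                X[word_i][tokens[other]] += 1
    return X


tokens = "the cat sat on the mat".split()
X = cooccurrence_counts(tokens, window=1)
```

With a symmetric window like this one, the counts satisfy `X[i][j] == X[j][i]`. A one-sided context definition would not give that symmetry.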

10. You have trained word embeddings using a text dataset of m1 words. You are considering using these word embeddings for a language task, for which you have a separate labeled dataset of m2 words. Keeping in mind that using word embeddings is a form of transfer learning, under which of these circumstances would you expect the word embeddings to be helpful?

- m1 << m2

- m1 >> m2