Improving Deep Neural Networks: Hyperparameter Tuning, Regularization and Optimization, Week 3 Quiz Answers

Quiz - Hyperparameter tuning, Batch Normalization, Programming Frameworks

1. Which of the following are true about hyperparameter search?

- Choosing random values for the hyperparameters is convenient since we might not know in advance which hyperparameters are more important for the problem at hand

- Choosing values in a grid for the hyperparameters is better when the number of hyperparameters to tune is high since it provides a more ordered way to search.

- When using random values for the hyperparameters, they must always be uniformly distributed.

- When sampling from a grid, the number of values for each hyperparameter is larger than when using random values.
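The grid-versus-random trade-off in these options can be checked with a quick count. The following is a minimal sketch, assuming two hypothetical hyperparameters on [0, 1) and a budget of 25 trials. It counts how many distinct values of the first hyperparameter each scheme actually tries. The function names and ranges are illustrative only.

```python
import random

def grid_search_points(n_per_axis):
    """Return every (h1, h2) pair on an n_per_axis x n_per_axis grid in [0, 1)."""
    # Evenly spaced values for each of two hypothetical hyperparameters
    values = [i / n_per_axis for i in range(n_per_axis)]
    return [(h1, h2) for h1 in values for h2 in values]

def random_search_points(n_trials, seed=0):
    """Draw n_trials (h1, h2) pairs uniformly at random from [0, 1)."""
    rng = random.Random(seed)
    return [(rng.random(), rng.random()) for _ in range(n_trials)]

grid = grid_search_points(5)              # 5 x 5 = 25 trials
random_points = random_search_points(25)  # the same budget of 25 trials

# Distinct values of the first hyperparameter explored by each scheme
distinct_grid = len({h1 for h1, _ in grid})
distinct_random = len({h1 for h1, _ in random_points})
print(len(grid), distinct_grid)  # 25 5
print(len(random_points), distinct_random)
```

With the same number of trials, the grid revisits only 5 values of each hyperparameter, while random sampling tries a new value on every trial. This matters when one hyperparameter turns out to be far more important than the other.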

2. In a project with limited computational resources, which three of the following hyperparameters would you choose to tune? Check all that apply.

- α (alpha)

- mini-batch size

- The β (beta) parameter of momentum in gradient descent

- ε in Adam

- β₁, β₂ in Adam

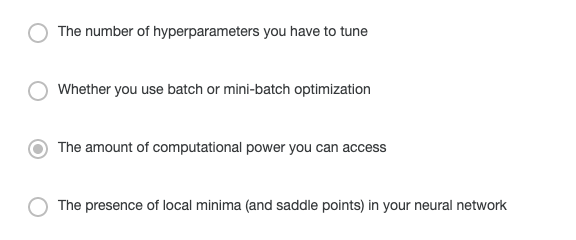

3. During hyperparameter search, whether you try to babysit one model (“Panda” strategy) or train a lot of models in parallel (“Caviar”) is largely determined by:

4. Knowing that the hyperparameter α should be in the range of 0.001 to 1.0, which of the following is the recommended way to sample a value for α?
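Sampling α on a logarithmic scale spreads the search evenly across orders of magnitude. The following is a minimal sketch, assuming a hypothetical helper `sample_log_uniform` and Python's `random` module. It draws an exponent r uniformly from [log10(0.001), log10(1.0)] = [-3, 0] and returns α = 10^r, then counts how many samples fall in each decade.

```python
import math
import random

def sample_log_uniform(low, high, rng):
    """Sample a value whose base-10 logarithm is uniform on [log10(low), log10(high)]."""
    r = rng.uniform(math.log10(low), math.log10(high))  # for [0.001, 1.0], r lies in [-3, 0]
    return 10 ** r

rng = random.Random(42)
samples = [sample_log_uniform(0.001, 1.0, rng) for _ in range(10000)]

# Each decade gets roughly a third of the samples. Uniform sampling on [0.001, 1.0]
# would instead place about 90% of them in [0.1, 1.0].
per_decade = [
    sum(1 for s in samples if 0.001 <= s < 0.01),
    sum(1 for s in samples if 0.01 <= s < 0.1),
    sum(1 for s in samples if 0.1 <= s <= 1.0),
]
print(per_decade)
```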

5. Finding good hyperparameter values is very time-consuming. So typically you should do it once at the start of the project, and try to find very good hyperparameters so that you don’t ever have to tune them again. True or false?

- False

- True

6. In batch normalization as presented in the videos, if you apply it to the l-th layer of your neural network, what are you normalizing?

- W^[l]

- b^[l]

- z^[l]

- a^[l]
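To see where normalization sits in a layer, the following is a minimal sketch for a single hidden unit. It follows the convention used in the course videos, where the mini-batch statistics are computed on the pre-activations z^[l] and the activation is applied afterwards. The values of z, the ReLU activation, and `eps=1e-8` are assumptions for illustration. Because the mini-batch mean is subtracted, any constant bias b^[l] added to z cancels out, and the learnable β takes over its role.

```python
import math

def batch_norm_unit(z_values, gamma, beta, eps=1e-8):
    """Batch-normalize one hidden unit's pre-activations z over a mini-batch."""
    m = len(z_values)
    mu = sum(z_values) / m                                         # mini-batch mean
    var = sum((z - mu) ** 2 for z in z_values) / m                 # mini-batch variance
    z_norm = [(z - mu) / math.sqrt(var + eps) for z in z_values]   # zero mean, unit variance
    return [gamma * zn + beta for zn in z_norm]                    # learnable scale and shift

def relu(x):
    return max(0.0, x)

# Hypothetical pre-activations z^[l] = W^[l] a^[l-1] + b^[l] of one unit for 4 examples
z = [2.0, 4.0, 6.0, 8.0]
z_tilde = batch_norm_unit(z, gamma=1.0, beta=0.0)
a = [relu(v) for v in z_tilde]  # the activation g^[l] is applied after normalizing z^[l]
```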

7. Which of the following are true about batch normalization?

- There is a global value of γ and β that is used for all the hidden layers where batch normalization is used.

- The parameters β and γ of batch normalization can’t be trained using Adam or RMSprop.

- The parameter ε in the batch normalization formula is used to accelerate the convergence of the model.

- One intuition behind why batch normalization works is that it helps reduce internal covariate shift.
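The roles of ε, γ, and β can be checked numerically. The following is a minimal sketch for one unit, assuming a hypothetical `normalize` helper and `eps=1e-8`. First, ε keeps the division defined when a mini-batch has zero variance; it is a numerical-stability term. Second, γ and β are ordinary learnable parameters, held separately for each layer (and each unit), so they can be updated with gradient descent, momentum, RMSprop, or Adam. With γ = sqrt(σ² + ε) and β = μ they can even undo the normalization.

```python
import math

def normalize(z_values, gamma, beta, eps=1e-8):
    """Batch-normalize one unit's pre-activations over a mini-batch, then scale and shift."""
    m = len(z_values)
    mu = sum(z_values) / m
    var = sum((z - mu) ** 2 for z in z_values) / m
    return [gamma * (z - mu) / math.sqrt(var + eps) + beta for z in z_values]

# 1) eps avoids division by zero when every example in the mini-batch has the same z
constant_batch = [3.0, 3.0, 3.0]
print(normalize(constant_batch, gamma=1.0, beta=0.0))  # [0.0, 0.0, 0.0]

# 2) gamma and beta are learned per unit; these particular values recover the original z
z = [1.0, 2.0, 3.0, 6.0]
mu = sum(z) / len(z)
var = sum((v - mu) ** 2 for v in z) / len(z)
restored = normalize(z, gamma=math.sqrt(var + 1e-8), beta=mu)
```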

8. Which of the following is true about batch normalization?

9. A neural network is trained with Batch Norm. At test time, to evaluate the neural network, we turn off Batch Norm to avoid random predictions from the network. True/False?

- True

- False
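One common way to handle Batch Norm at test time is to keep exponentially weighted averages of the mini-batch mean and variance during training and use those estimates when evaluating single examples. The following is a minimal sketch of that approach for one unit. The class name, the `decay` parameter (the averaging weight, 0.9 here), and the initial running values are hypothetical choices for illustration.

```python
import math

class BatchNormUnit:
    """Batch norm for one hidden unit that keeps running statistics for test time."""

    def __init__(self, gamma=1.0, beta=0.0, decay=0.9, eps=1e-8):
        self.gamma, self.beta = gamma, beta
        self.decay, self.eps = decay, eps  # decay: hypothetical averaging weight
        self.running_mean, self.running_var = 0.0, 1.0

    def forward_train(self, z_values):
        m = len(z_values)
        mu = sum(z_values) / m
        var = sum((z - mu) ** 2 for z in z_values) / m
        # Exponentially weighted averages of the mini-batch statistics
        self.running_mean = self.decay * self.running_mean + (1 - self.decay) * mu
        self.running_var = self.decay * self.running_var + (1 - self.decay) * var
        return [self.gamma * (z - mu) / math.sqrt(var + self.eps) + self.beta for z in z_values]

    def forward_test(self, z):
        # A single test example has no mini-batch, so the running estimates are used instead
        z_norm = (z - self.running_mean) / math.sqrt(self.running_var + self.eps)
        return self.gamma * z_norm + self.beta

bn = BatchNormUnit()
for _ in range(200):
    bn.forward_train([4.0, 6.0])  # mini-batch mean 5.0, variance 1.0
print(round(bn.forward_test(6.0), 3))  # 1.0
```

The test-time output depends only on the input and the stored statistics, so Batch Norm stays on and the predictions are deterministic.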

10. If a project is open-source, it is a guarantee that it will remain open source in the long run and will never be modified to benefit only one company. True/False?

- False

- True