Sequence Models: Coursera Week 1 Quiz Answers

Quiz - Recurrent Neural Networks

1. Suppose your training examples are sentences (sequences of words). Which of the following refers to the j-th word in the i-th training example?

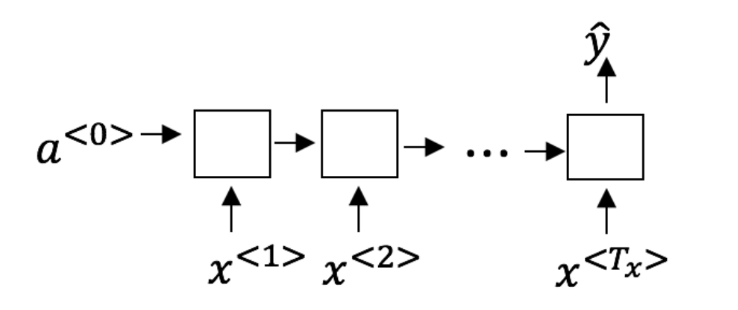
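In the course notation, the round-bracket superscript $(i)$ indexes the training example and the angle-bracket superscript $\langle j \rangle$ indexes the position inside that sequence, so the j-th word of the i-th example is $x^{(i)\langle j \rangle}$. A minimal sketch, assuming a hypothetical toy dataset stored as a list of tokenized sentences (the names `X` and `word` are illustrative, not from the course):

```python
# Hypothetical toy training set: each training example is a list of words.
X = [
    ["the", "cat", "sat"],                 # x^(1)
    ["dogs", "bark", "loudly", "today"],   # x^(2)
]

def word(X, i, j):
    """Return x^(i)<j>: the j-th word of the i-th example (both 1-indexed, as in the notation)."""
    return X[i - 1][j - 1]

print(word(X, 2, 3))  # loudly
```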

2. Consider this RNN:

True/False: This specific type of architecture is appropriate when $T_x > T_y$.

- True

- False

3. To which of these tasks would you apply a many-to-one RNN architecture? (Check all that apply).

- Speech recognition (input an audio clip and output a transcript)

- Sentiment classification (input a piece of text and output a 0/1 to denote positive or negative sentiment)

- Image classification (input an image and output a label)

- Gender recognition from speech (input an audio clip and output a label indicating the speaker’s gender)
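Tasks that read a whole input sequence and produce a single label, such as sentiment classification or gender recognition from an audio clip, fit the many-to-one pattern. The sketch below is a hypothetical scalar RNN with hand-picked weights (an assumption for illustration, not trained values): it updates its activation at every input step and emits one output only after the last step.

```python
import math

def many_to_one_rnn(xs, w_aa=0.5, w_ax=1.0, w_ya=2.0, b_a=0.0, b_y=0.0):
    """Hypothetical scalar RNN: reads every input x<1>..x<Tx>, emits one output (T_y = 1)."""
    a = 0.0  # a<0>
    for x in xs:
        a = math.tanh(w_aa * a + w_ax * x + b_a)  # a<t> from a<t-1> and x<t>
    # Only the final activation a<Tx> is mapped to an output: sigmoid -> P(label = 1).
    return 1.0 / (1.0 + math.exp(-(w_ya * a + b_y)))

# e.g. hypothetical per-word features for a 4-word review
p_positive = many_to_one_rnn([0.2, 0.9, 0.7, 0.4])
print(round(p_positive, 3))
```

The same function accepts sequences of any length and always returns a single probability, which is what distinguishes it from a many-to-many model such as speech-to-transcript.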

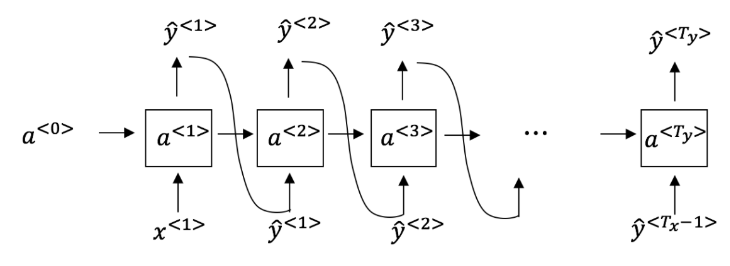

5. You have finished training a language model RNN and are using it to sample random sentences, as follows:

What are you doing at each time step t?
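During sampling, at each time step the model produces a softmax distribution $\hat{y}^{\langle t \rangle}$ over the vocabulary; you sample a word from it (rather than taking the argmax) and feed that sampled word back in as the next input $x^{\langle t+1 \rangle}$. The sketch below assumes a hypothetical lookup table `TOY_MODEL` standing in for a trained RNN; note that a real RNN conditions on its hidden state (the whole history), not just the previous word as this toy table does.

```python
import random

# Hypothetical stand-in for a trained RNN: previous word -> softmax distribution y_hat<t>.
TOY_MODEL = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.3, "dog": 0.7},
    "cat": {"sleeps": 0.8, "<EOS>": 0.2},
    "dog": {"barks": 0.9, "<EOS>": 0.1},
    "sleeps": {"<EOS>": 1.0},
    "barks": {"<EOS>": 1.0},
}

def sample_sentence(model, rng, max_len=10):
    prev = "<s>"  # start token, standing in for the zero vector x<1> = 0
    sentence = []
    for _ in range(max_len):
        probs = model[prev]  # y_hat<t>
        # Sample the next word according to y_hat<t> (not argmax) ...
        word = rng.choices(list(probs), weights=list(probs.values()), k=1)[0]
        if word == "<EOS>":
            break
        sentence.append(word)
        prev = word  # ... and feed it back in as x<t+1>
    return sentence

print(sample_sentence(TOY_MODEL, random.Random(0)))
```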

6. True/False: If you are training an RNN model, and find that your weights and activations are all taking on the value of NaN (“Not a Number”) then you have a vanishing gradient problem.

- True

- False
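NaN weights and activations usually point to exploding rather than vanishing gradients: backpropagating through many time steps repeatedly multiplies by similar factors, and if those factors exceed 1 the values overflow to `inf`, after which operations like `inf - inf` yield `nan`. A common remedy is gradient clipping. A minimal sketch, assuming a scalar recurrent factor of 3.0 and an arbitrary clipping threshold of 5.0:

```python
import math

def clip_by_norm(grads, max_norm=5.0):
    """Rescale a list of gradient values so their L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm > max_norm:
        scale = max_norm / norm
        return [g * scale for g in grads]
    return list(grads)

# Backprop through many steps multiplies by roughly the same recurrent factor each step.
w = 3.0
grad = 1.0
for _ in range(1000):
    grad *= w
print(grad)         # inf: the gradient has exploded
print(grad - grad)  # nan: inf - inf is not a number

print(clip_by_norm([6.0, 8.0]))  # [3.0, 4.0]: norm 10 rescaled down to 5
```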

7. Suppose you are training an LSTM. You have a 10000-word vocabulary and are using an LSTM with 100-dimensional activations $a^{<t>}$. What is the dimension of $\Gamma_u$ at each time step?

- 1

- 100

- 300

- 10000
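The update gate is computed as $\Gamma_u = \sigma(W_u[a^{\langle t-1 \rangle}, x^{\langle t \rangle}] + b_u)$, and it is applied elementwise to the memory cell, so it has one entry per hidden unit: its size matches $a^{\langle t \rangle}$, not the vocabulary. A minimal sketch with random weights and a hypothetical one-hot input, just to show the shapes:

```python
import math
import random

n_a, n_x = 100, 10000  # activation size, vocabulary size (one-hot input)
rng = random.Random(0)

# W_u has shape (n_a, n_a + n_x), so W_u @ [a<t-1>, x<t>] has n_a entries.
W_u = [[rng.uniform(-0.01, 0.01) for _ in range(n_a + n_x)] for _ in range(n_a)]
b_u = [0.0] * n_a

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

a_prev = [0.0] * n_a
x_t = [0.0] * n_x
x_t[42] = 1.0  # hypothetical one-hot word index
concat = a_prev + x_t  # [a<t-1>, x<t>], length n_a + n_x

gamma_u = [sigmoid(sum(w * v for w, v in zip(row, concat)) + b) for row, b in zip(W_u, b_u)]
print(len(gamma_u))  # 100
```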

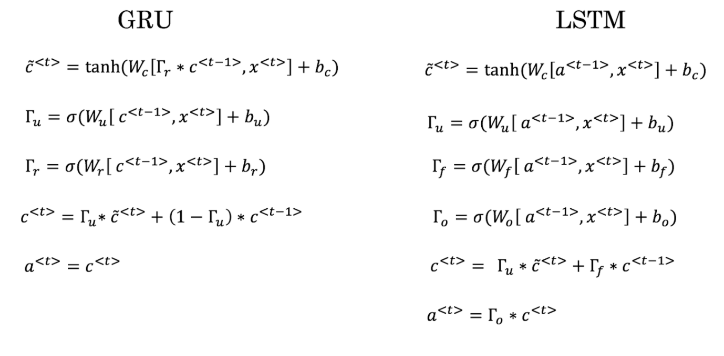

8. Sarah proposes to simplify the GRU by always removing the $\Gamma_u$, i.e., setting $\Gamma_u = 0$. Ashely proposes to simplify the GRU by removing the $\Gamma_r$, i.e., setting $\Gamma_r = 1$ always. Which of these models is more likely to work without vanishing gradient problems even when trained on very long input sequences?
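With the GRU memory update $c^{\langle t \rangle} = \Gamma_u \ast \tilde{c}^{\langle t \rangle} + (1 - \Gamma_u) \ast c^{\langle t-1 \rangle}$, setting $\Gamma_u = 0$ gives $c^{\langle t \rangle} = c^{\langle t-1 \rangle}$, so the gradient along the memory path is exactly 1 at every step (the model simply never updates its memory). Keeping $\Gamma_u$ and fixing $\Gamma_r = 1$ still multiplies the gradient by a factor below 1 at each step. The sketch below is a scalar illustration under a stated simplification: the gates are held at fixed values (0.5 is an arbitrary assumption) instead of being computed from $c^{\langle t-1 \rangle}$ and $x^{\langle t \rangle}$.

```python
import math

def gru_memory_grad(xs, gamma_u, gamma_r, w_c=1.0, u_c=1.0, c0=0.0):
    """Run a scalar GRU memory cell; return (c<T>, dc<T>/dc<0>).

    Assumption: gates are fixed constants, so only the c<t-1> -> c<t> paths count.
    """
    c, grad = c0, 1.0
    for x in xs:
        c_tilde = math.tanh(w_c * gamma_r * c + u_c * x)  # candidate from c<t-1>
        # Chain rule for c<t> = gamma_u * c_tilde + (1 - gamma_u) * c<t-1>
        local = (1.0 - gamma_u) + gamma_u * (1.0 - c_tilde ** 2) * w_c * gamma_r
        c = gamma_u * c_tilde + (1.0 - gamma_u) * c
        grad *= local
    return c, grad

xs = [1.0] * 1000  # a long hypothetical input sequence
print(gru_memory_grad(xs, gamma_u=0.0, gamma_r=0.5))  # Sarah: (0.0, 1.0)
print(gru_memory_grad(xs, gamma_u=0.5, gamma_r=1.0))  # Ashely: gradient is vanishingly small
```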

9. True/False: Using the equations for the GRU and LSTM below, the update gate and forget gate in the LSTM play a different role from $\Gamma_u$ and $1 - \Gamma_u$.

- False

- True
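The two memory updates can be compared side by side: the GRU uses $c^{\langle t \rangle} = \Gamma_u \ast \tilde{c}^{\langle t \rangle} + (1 - \Gamma_u) \ast c^{\langle t-1 \rangle}$, while the LSTM uses $c^{\langle t \rangle} = \Gamma_u \ast \tilde{c}^{\langle t \rangle} + \Gamma_f \ast c^{\langle t-1 \rangle}$. The LSTM's update and forget gates therefore occupy the same slots as $\Gamma_u$ and $1 - \Gamma_u$, only without being tied together. A minimal scalar sketch, assuming the gate values are passed in directly (in real models they are vectors produced by sigmoids):

```python
def gru_memory(c_prev, c_tilde, gamma_u):
    # GRU: c<t> = gamma_u * c_tilde<t> + (1 - gamma_u) * c<t-1>
    return gamma_u * c_tilde + (1.0 - gamma_u) * c_prev

def lstm_memory(c_prev, c_tilde, gamma_u, gamma_f):
    # LSTM: c<t> = gamma_u * c_tilde<t> + gamma_f * c<t-1>
    return gamma_u * c_tilde + gamma_f * c_prev

for gamma_u in (0.0, 0.25, 0.5, 0.9):
    # Tying the forget gate to 1 - gamma_u recovers the GRU update exactly.
    assert lstm_memory(0.3, -0.7, gamma_u, 1.0 - gamma_u) == gru_memory(0.3, -0.7, gamma_u)

# With independent gates, the LSTM can keep the old memory AND write new content.
print(lstm_memory(0.3, -0.7, gamma_u=1.0, gamma_f=1.0))
```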

10. True/False: You would use a unidirectional RNN if you were building a model to map how your mood is heavily dependent on the current and past few days' weather.

- True

- False
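A unidirectional RNN computes its output at step $t$ from $x^{\langle 1 \rangle}, \dots, x^{\langle t \rangle}$ only, which matches a target that depends on the current and past days but not on future ones; a bidirectional RNN would also read later inputs. A minimal sketch, assuming a hypothetical scalar RNN and made-up daily weather scores, showing that changing the last day leaves all earlier outputs untouched:

```python
import math

def unidirectional_rnn(xs, w_aa=0.8, w_ax=0.5):
    """Hypothetical scalar RNN: the output at step t depends only on x<1>..x<t>."""
    a, outputs = 0.0, []
    for x in xs:
        a = math.tanh(w_aa * a + w_ax * x)
        outputs.append(a)
    return outputs

weather = [0.1, 0.4, -0.2, 0.9]   # hypothetical daily weather scores
changed = weather[:-1] + [-5.0]   # alter only the last day
print(unidirectional_rnn(weather)[:3] == unidirectional_rnn(changed)[:3])  # True
```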